Brainwaves and Beats: Using EEG to Understand Music and Speech Processing

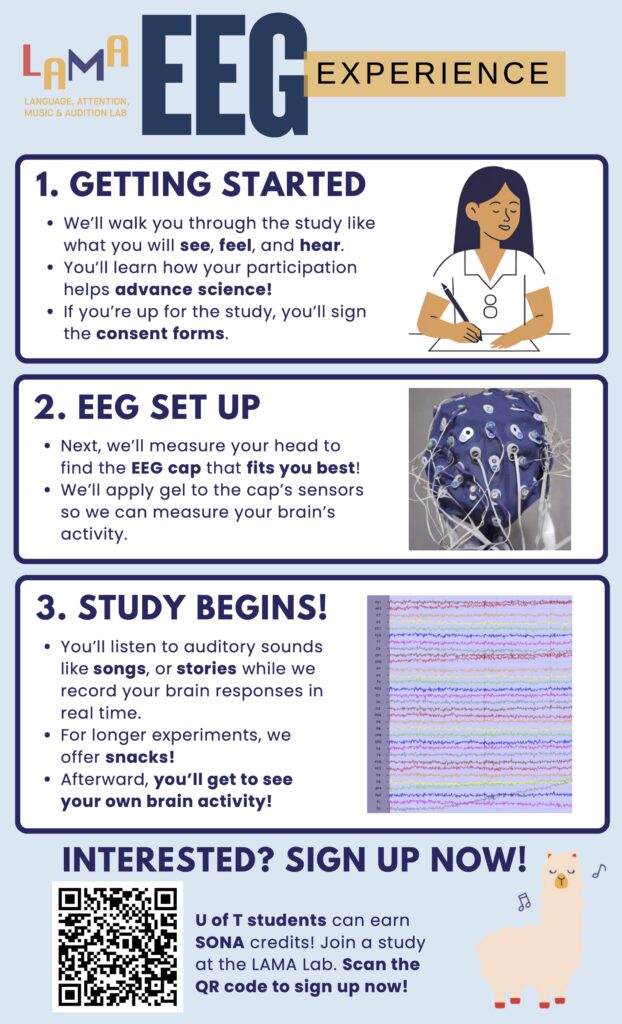

Have you ever wondered what it would be like to participate in a brain study?

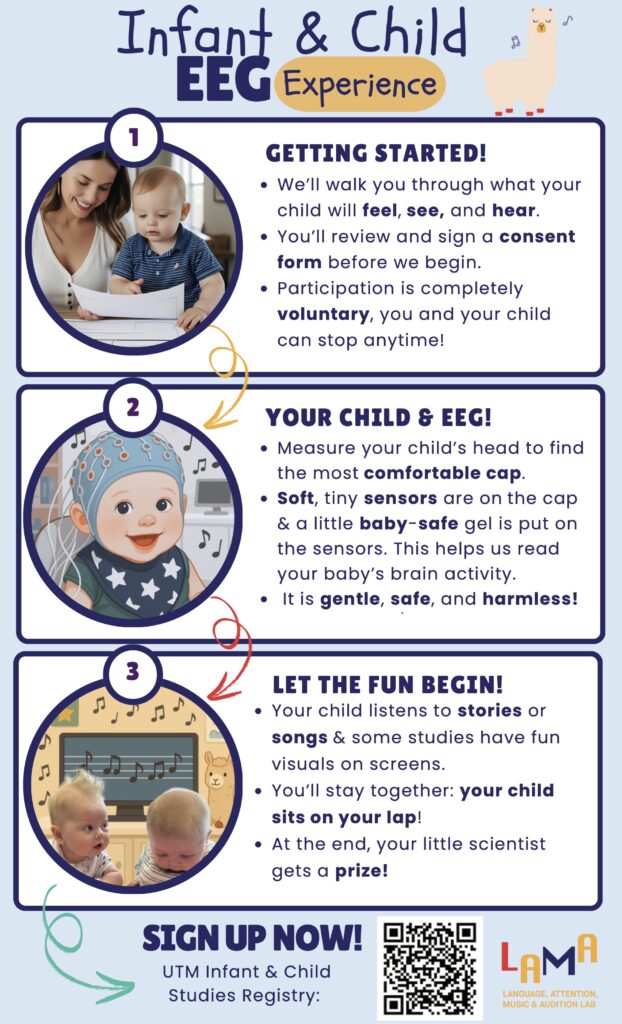

Curious about what it might be like for your child to be part of a brain study?

Interested in how music and language are processed in the brain?

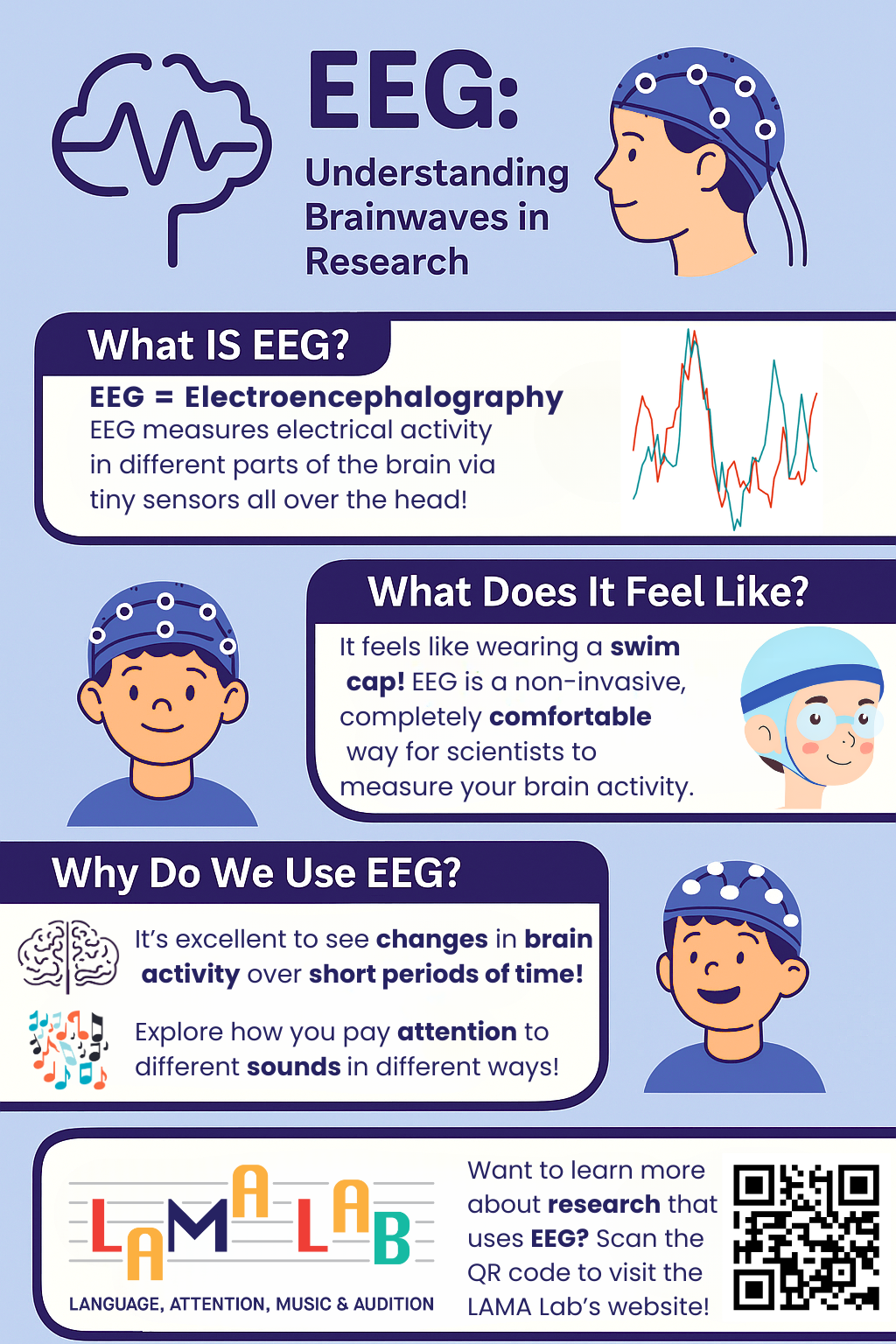

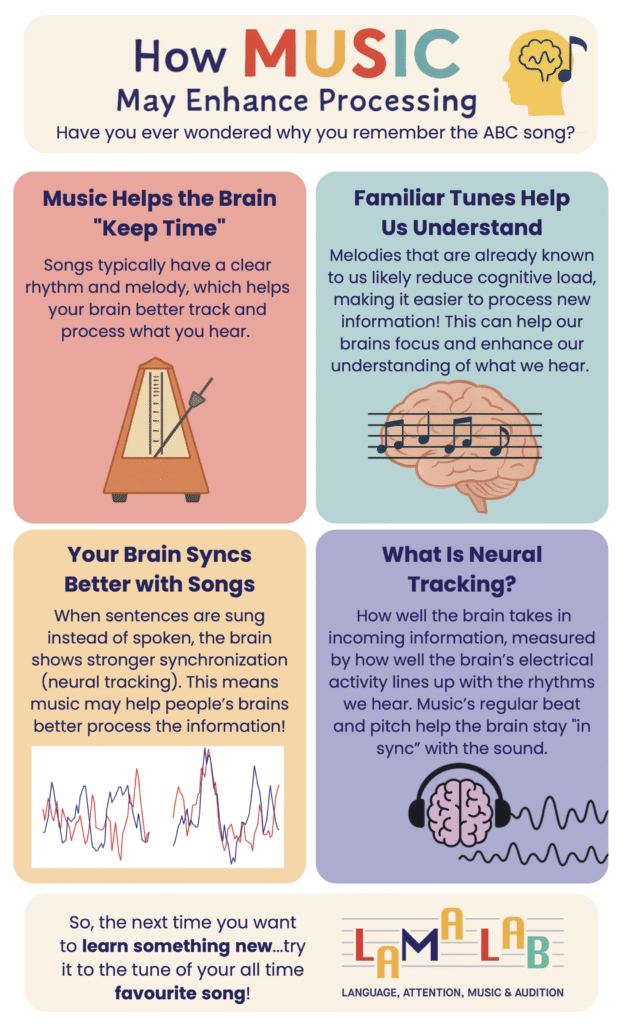

Research at the Language Attention Music and Audition (LAMA) Lab uses EEG (electroencephalography) technology to explore how our brains respond to language, sounds, and songs.

Through non-invasive brain recordings, the LAMA Lab is investigating how familiar melodies might help children better understand speech and language. Music, it turns out, may be a powerful tool for helping the brain process speech!

But EEG can sound a little intimidating … especially to potential participants, or to parents considering it for their children.

That’s why I’ve created a set of easy-to-follow infographics that break everything down. Whether you’re a parent curious about your child’s potential participation, an adult interested in volunteering, or someone who just loves learning about the brain, these graphics are for you!

Who This Is For

These infographics were created for research participants, caregivers, and the broader community, including students and community members in health, psychology, and education.

Why Knowledge Translation?

Bridge the gap between researchers and the public, especially families who are often asked to participate in studies.

Empower parents with information so they feel confident saying yes (or no!) to participation.

Bust myths around brain research and EEG (it’s not invasive or scary!)

Highlight the benefits to both science and participants: your contributions can make a difference in what we know about the brain!

Make science equitable by removing jargon and making research feel familiar, fun, and valuable.

Acknowledgements

This project was created as part of the Knowledge Translation (KT) course at the University of Toronto Mississauga. Thank you to the course instructor, teaching assistant, and the LAMA Lab for their support and guidance throughout the process.

All images and graphics used in this project were either sourced from the LAMA Lab or AI-generated.

References

Vanden Bosch der Nederlanden, C. M., Joanisse, M. F., & Grahn, J. A. (2020). Music as a scaffold for listening to speech: Better neural phase-locking to song than speech. NeuroImage, 214, Article 116767. https://doi.org/10.1016/j.neuroimage.2020.116767

Vanden Bosch der Nederlanden, C. M., Joanisse, M. F., Grahn, J. A., Snijders, T. M., & Schoffelen, J.-M. (2022). Familiarity modulates neural tracking of sung and spoken utterances. NeuroImage, 252, Article 119049. https://doi.org/10.1016/j.neuroimage.2022.119049